OpenAI Embraces Google’s AI Chips: A New Era of Accelerated Intelligence

In a move that has caught the attention of the global tech community, OpenAI has begun using Google’s custom AI chips to power its next-generation models and infrastructure. OpenAI has long relied on NVIDIA’s GPUs for training and inference, so this strategic shift toward Google’s Tensor Processing Units (TPUs) signals more than a hardware change: it points to a new era in AI cooperation, performance, and scale.

Why Google’s Chips? Breaking Down the Shift

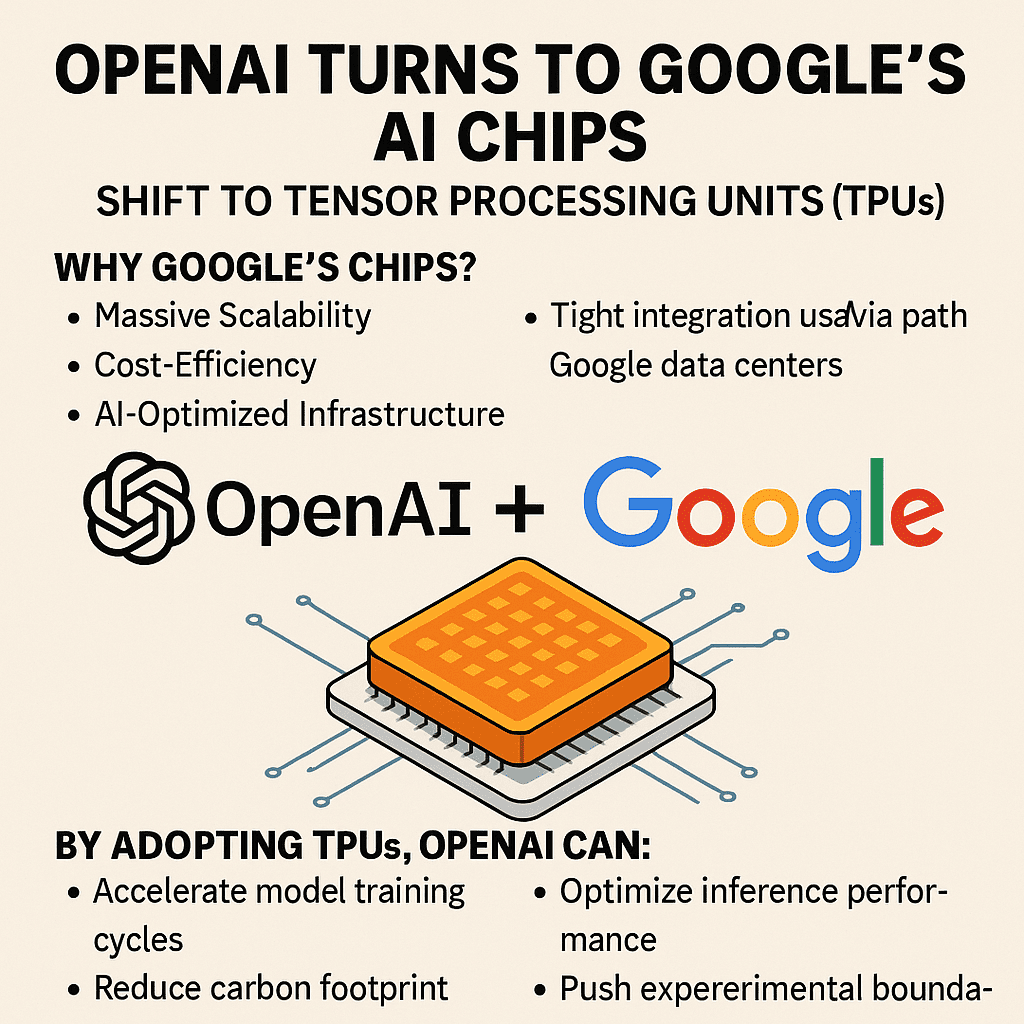

OpenAI’s decision to tap into Google’s AI chip architecture is based on three key motivations:

- Massive Scalability – Google’s TPUs, especially the fifth-generation TPU v5e and upcoming TPU v6, offer highly parallel processing power optimized for deep learning at hyperscale.

- Cost-Efficiency – Given the high cost and recent shortage of NVIDIA’s H100 GPUs, TPUs present a more economically viable path for long-term AI development.

- AI-Optimized Infrastructure – Google’s hardware-software ecosystem (TPUs + JAX + Google Cloud AI Platform) is tightly integrated, minimizing friction and boosting speed.

By combining these factors, OpenAI gains both performance and flexibility—two things that are crucial as demand for AI applications surges globally.

OpenAI + Google: A Strategic Alliance, Not a Merger

Contrary to speculation, this is not a corporate acquisition or merger. Rather, OpenAI is partnering with Google Cloud to run specific workloads on TPU-powered infrastructure. Sources familiar with the arrangement confirm that OpenAI still runs most of its GPT models on Microsoft Azure, especially those powering ChatGPT.

However, certain experimental projects and internal research systems are now partially hosted on Google Cloud’s TPU stack—a sign that OpenAI is intentionally diversifying its compute backend to reduce dependency on a single vendor.

This diversification is a deliberate strategy. In an industry driven by speed, power, and uptime, multi-cloud resilience can offer an edge over competitors who are locked into a single provider.

The AI Race: From Silicon to Superintelligence

This move also sheds light on a bigger trend: AI compute is now the most valuable currency in innovation. In 2023 and 2024, the shortage of NVIDIA GPUs led to delays, cost hikes, and limited model access for AI startups and developers. As demand for AI models like GPT-5, DALL·E, and Sora rises, so does the need for stable and scalable chip access.

By adopting Google’s TPUs, OpenAI can:

- Accelerate model training cycles

- Optimize inference performance

- Reduce carbon footprint using Google’s sustainability-focused data centers

- Push experimental boundaries without overloading Azure clusters

This evolution may also pave the way for OpenAI to experiment with custom chip integrations, possibly co-developing hardware down the line, an idea that has been floated since 2023.

Behind the Scenes: What Are TPUs, and Why Do They Matter?

Tensor Processing Units (TPUs) are application-specific integrated circuits (ASICs) designed by Google for machine learning tasks. Unlike general-purpose GPUs, TPUs are tailored for matrix-heavy calculations—a core component of training and running large language models.
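
To make "matrix-heavy" concrete, here is a minimal JAX sketch of a single dense layer: one matrix multiply plus a bias add, the operation that dominates large language model compute. The function name dense_layer, the layer sizes, and the random inputs are illustrative assumptions, not anything taken from OpenAI's or Google's code.

```python
import jax
import jax.numpy as jnp


@jax.jit  # compile the function with XLA for whichever backend is available
def dense_layer(x, w, b):
    # One matrix multiply plus a bias add, the building block that is
    # repeated throughout transformer-style models.
    return jnp.dot(x, w) + b


# Illustrative sizes only (assumed for this sketch).
key = jax.random.PRNGKey(0)
key_x, key_w = jax.random.split(key)
x = jax.random.normal(key_x, (8, 512))    # batch of 8 input vectors
w = jax.random.normal(key_w, (512, 256))  # weight matrix
b = jnp.zeros((256,))                     # bias vector

y = dense_layer(x, w, b)

print(jax.default_backend())  # "cpu", "gpu", or "tpu", depending on the machine
print(y.shape)
```

The same script runs unchanged on a CPU, a GPU, or a TPU, because JAX hands the compiled computation to whatever accelerator it finds. On a TPU, the matrix multiply is the part that maps onto the chip's dedicated matrix units.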

The latest TPU versions deliver:

- Over 100 petaflops per pod, though the exact figure depends on the TPU generation, pod size, and numeric precision

- Improved memory bandwidth and precision

- Seamless support for Google’s JAX and TensorFlow ecosystems

- Massive parallelism that speeds up model convergence
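
The parallelism in the last point can be sketched in JAX by splitting a batch of data across every available chip. This is only an illustration under assumed names (a one-dimensional device mesh with an axis called data, and a toy mean_square function); real training setups are considerably more involved.

```python
import jax
import jax.numpy as jnp
import numpy as np
from jax.sharding import Mesh, NamedSharding, PartitionSpec as P

# Arrange all visible devices (TPU cores, GPUs, or a single CPU) in a 1-D mesh.
devices = np.array(jax.devices())
mesh = Mesh(devices, axis_names=("data",))
n = len(devices)

# A toy batch whose leading dimension divides evenly across the devices.
batch = jnp.arange(n * 4 * 16, dtype=jnp.float32).reshape(n * 4, 16)

# Split the batch along its first axis, one slice per device.
sharded = jax.device_put(batch, NamedSharding(mesh, P("data", None)))


@jax.jit
def mean_square(x):
    # Each device works on its own slice; the compiler inserts the
    # cross-device reduction needed to produce a single scalar.
    return jnp.mean(x ** 2)


result = mean_square(sharded)
print(n, result)
```

On a machine with a single device the batch simply stays in one piece and the code still runs. On a TPU slice, the same code spreads the work across the chips without changes to mean_square itself.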

Simply put: TPUs are engines built specifically for AI, making them ideal for foundation model training and deployment.

What This Means for Developers, Users & the Industry

For everyday users, this could mean:

- Faster response times in ChatGPT and OpenAI APIs

- More frequent model updates

- Improved uptime and scalability during peak traffic

- Lower energy use, and therefore greener AI

For developers and enterprise clients, the implication is broader: OpenAI’s tools could soon offer better latency, throughput, and global accessibility, especially as they offload some workloads to Google’s robust infrastructure.

Competitive Implications: Microsoft, NVIDIA, and the New Balance

This partnership does raise eyebrows at OpenAI’s long-time ally Microsoft, which has invested billions in the startup. However, OpenAI remains committed to Azure as its core cloud partner.

Instead of replacing one with the other, OpenAI appears to be building a multi-vendor ecosystem, drawing on resources from Microsoft, Google, and NVIDIA where each performs best.

This could become a template for AI companies: a future where no single chipmaker or cloud platform holds a monopoly on artificial intelligence development.

Conclusion: OpenAI’s Chip Strategy Is a Sign of Things to Come

OpenAI’s turn to Google’s TPUs is more than a tech update—it’s a signal of how the AI landscape is shifting. In a world where innovation is bottlenecked by compute access, companies must be agile, diversified, and performance-focused.

By tapping into Google’s AI muscle while maintaining its ties with Microsoft and NVIDIA, OpenAI isn’t just accelerating its own models—it’s building an AI infrastructure blueprint that could define the next decade of artificial intelligence.